Introduction

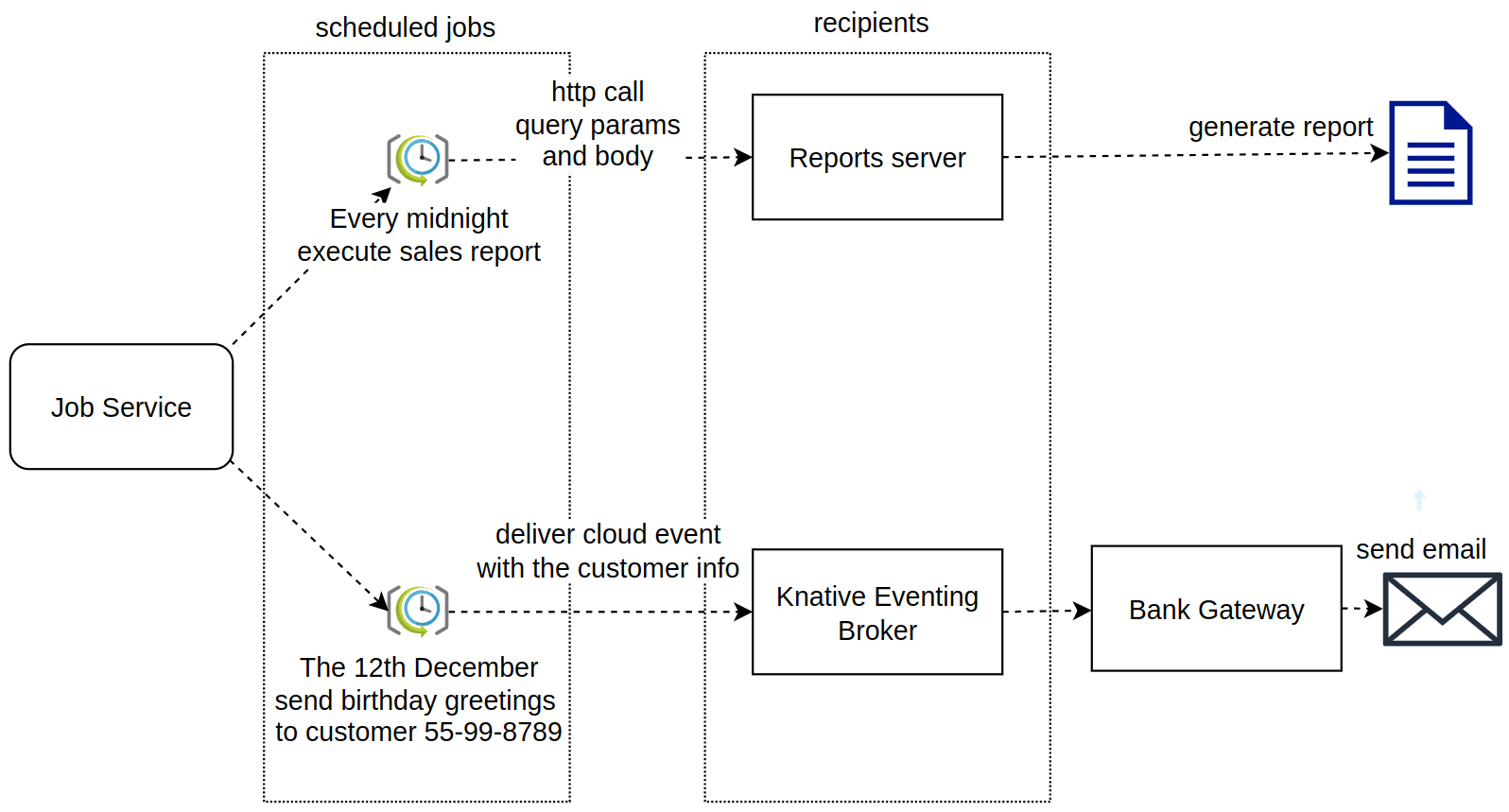

The Job Service facilitates the scheduled execution of tasks in a cloud environment. These tasks are implemented by independent services, and can be started by using any of the interaction modes supported by the Job Service, which are based on HTTP calls or Knative Events delivery.

To schedule the execution of a task, you must create a Job that is configured with the following information:

- Schedule: the job triggering periodicity.
- Recipient: the entity that is called on the job execution for the given interaction mode, and receives the execution parameters.
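As an illustration only, the two pieces of information above can be pictured as a small data model. The class and field names below (`Schedule`, `Recipient`, `Job`, `interval_seconds`, `url`, `payload`) are hypothetical and are not the Job Service API; the sketch only shows how a schedule and a recipient combine into a job.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Schedule:
    """Hypothetical schedule: a start time plus an optional repeat interval."""
    start: datetime
    interval_seconds: Optional[int] = None  # None means "run once"

    def next_run_after(self, now: datetime) -> Optional[datetime]:
        """Return the first trigger time strictly after `now`, or None if no run is left."""
        if now < self.start:
            return self.start
        if self.interval_seconds is None:
            return None
        elapsed = (now - self.start).total_seconds()
        periods = int(elapsed // self.interval_seconds) + 1
        return self.start + timedelta(seconds=periods * self.interval_seconds)


@dataclass
class Recipient:
    """Hypothetical recipient: the endpoint to call and the parameters it receives."""
    url: str
    payload: dict = field(default_factory=dict)


@dataclass
class Job:
    """A job ties one schedule to one recipient."""
    id: str
    schedule: Schedule
    recipient: Recipient
```

With this model, a job that repeats every minute would report its next trigger time through `job.schedule.next_run_after(now)`, and the recipient describes where the execution parameters are sent.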

Integration with the Workflows

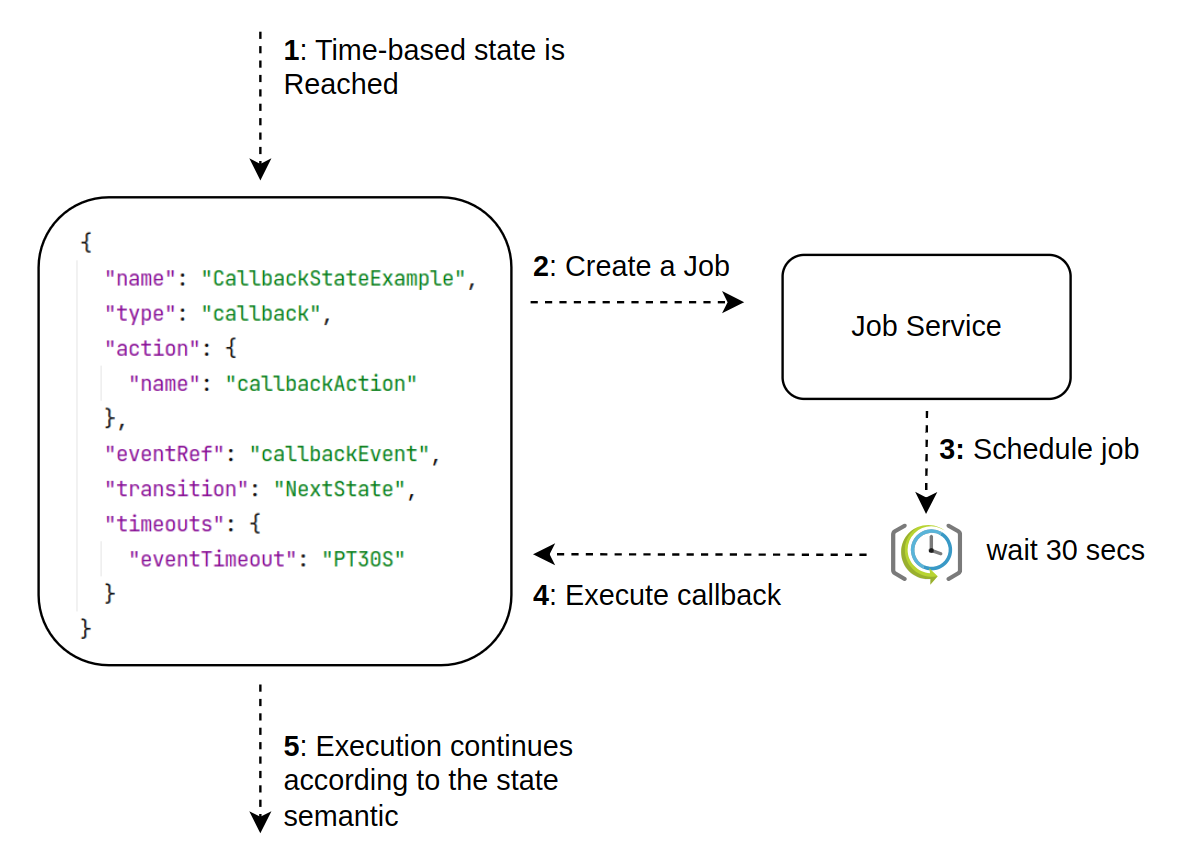

In the context of the Kogito Serverless Workflows, the Job Service is responsible for controlling the execution of the time-triggered actions. Therefore, all the time-based states that you can use in a workflow are handled by the interaction between the workflow and the Job Service.

For example, every time the workflow execution reaches a state with a configured timeout, a corresponding job is created in the Job Service, and when the timeout is met, an HTTP callback is executed to notify the workflow.

To set up this integration, you can use different communication alternatives, which must be configured by combining the Job Service and the Quarkus Workflow Project configurations.

Note: If the project is not configured to use the Job Service, all time-based actions use an in-memory implementation of that service. However, this setup must not be used in production, because all the timers are lost every time the application is restarted. This makes it unsuitable for serverless architectures, where the applications might scale to zero at any time.

Jobs life-span

Since the main goal of the Job Service is to work with the active jobs, such as the scheduled jobs that need to be executed, a job is removed from the Job Service when it reaches a final state. However, if you want to keep the information about the jobs in a permanent repository, you can configure the Job Service to produce status change events. These events can be collected by the Data Index Service, where they are indexed and made available through GraphQL queries.

Executing

To execute the Job Service in your Docker or Kubernetes environment, you must use the kogito-jobs-service-allinone image (quay.io/kiegroup/kogito-jobs-service-allinone), which is the image used in the examples of this document.

The next topics describe the different configuration parameters that you must use, for example, to configure the persistence mechanism or the eventing system.

We recommend that you follow this procedure:

- Identify the persistence mechanism to use and see the required configuration parameters.
- Identify if the Eventing API is required for your needs and see the required configuration parameters.
- Identify if the project containing your workflows is configured with the appropriate Job Service Quarkus Extension.

Finally, to run the image, you must use the environment variables exposed by the image, together with any other configurations, which you can set by using additional environment variables or by using system properties with Java-like names.

Exposed environment variables

| Variable | Description |
|---|---|
| | Enable debug level of the image and its operations. |
| JOBS_SERVICE_PERSISTENCE | Any of the following values: postgresql, ephemeral, or infinispan. |

Note: If used, these values must always be passed as environment variables.

Using environment variables

To configure the image by using environment variables, you must pass one environment variable per parameter.

    docker run -it -e JOBS_SERVICE_PERSISTENCE=postgresql -e VARIABLE_NAME=value quay.io/kiegroup/kogito-jobs-service-allinone:latest

In a Kubernetes deployment, the image parameters are set as environment variables in the container definition:

    spec:
      containers:
        - name: jobs-service-postgresql
          image: quay.io/kiegroup/kogito-jobs-service-allinone-nightly:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              name: http
              protocol: TCP
          env:
            # Set the image parameters as environment variables in the container definition.
            - name: KUBERNETES_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: JOBS_SERVICE_PERSISTENCE
              value: "postgresql"
            - name: QUARKUS_DATASOURCE_USERNAME
              value: postgres
            - name: QUARKUS_DATASOURCE_PASSWORD
              value: pass
            - name: QUARKUS_DATASOURCE_JDBC_URL
              value: jdbc:postgresql://timeouts-showcase-database:5432/postgres?currentSchema=jobs-service
            - name: QUARKUS_DATASOURCE_REACTIVE_URL
              value: postgresql://timeouts-showcase-database:5432/postgres?search_path=jobs-service

Note: This is the recommended approach when you execute the Job Service in Kubernetes. The timeouts showcase example Quarkus Workflow Project with standalone services contains an example of this configuration.

Using system properties with Java-like names

To configure the image by using system properties, you must pass one property per parameter. However, in this case, all these properties are passed as part of a single environment variable named JAVA_OPTIONS.

    docker run -it -e JOBS_SERVICE_PERSISTENCE=postgresql -e JAVA_OPTIONS='-Dmy.sys.prop1=value1 -Dmy.sys.prop2=value2' \
      quay.io/kiegroup/kogito-jobs-service-allinone:latest

Note: If you need to convert a Java-like property name to the corresponding environment variable name, in order to use the environment variables configuration alternative, you must apply the naming convention defined in the Quarkus Configuration Reference.
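The conversion can be sketched as follows. This is a minimal illustration, assuming the MicroProfile Config style rule that Quarkus follows for environment variables: every character that is not a letter, a digit, or an underscore is replaced with an underscore, and the result is converted to upper case. The helper name `to_env_var_name` is hypothetical, and the Quarkus Configuration Reference remains the authoritative description of the convention.

```python
import re


def to_env_var_name(property_name: str) -> str:
    """Convert a Java-like property name to an environment variable name.

    Assumed rule: replace every character that is not a letter, digit,
    or underscore with "_", then upper-case the result.
    """
    return re.sub(r"[^A-Za-z0-9_]", "_", property_name).upper()


if __name__ == "__main__":
    for name in ("quarkus.datasource.jdbc.url", "quarkus.datasource.reactive.url"):
        print(name, "->", to_env_var_name(name))
```

Under this rule, `quarkus.datasource.jdbc.url` maps to `QUARKUS_DATASOURCE_JDBC_URL`, which is the variable name used in the Kubernetes example in this document.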

Global configurations

Global configurations that affect the job execution retries, the startup procedure, and so on.

| Name | Description | Default |
|---|---|---|
| | A long value that defines the retry back-off time in milliseconds between job execution attempts, in case the execution fails. | |
| | A long value that defines the maximum interval in milliseconds when retrying to execute jobs, in case the execution fails. | |
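The exact retry algorithm is not described here. As a rough mental model only, the sketch below assumes a fixed back-off between attempts and assumes that retries stop once the accumulated waiting time would exceed the maximum interval. Both assumptions, the function name `retry_offsets`, and its parameter names are hypothetical and may differ from what the Job Service actually does.

```python
from typing import List


def retry_offsets(backoff_millis: int, max_interval_millis: int) -> List[int]:
    """Return the offsets (in ms after the first failure) at which retries would run.

    Assumption: a fixed back-off between attempts, and no retry is scheduled
    once the accumulated wait would exceed max_interval_millis.
    """
    if backoff_millis <= 0:
        raise ValueError("backoff_millis must be positive")
    offsets = []
    elapsed = backoff_millis
    while elapsed <= max_interval_millis:
        offsets.append(elapsed)
        elapsed += backoff_millis
    return offsets


if __name__ == "__main__":
    print(retry_offsets(1000, 5000))
```

Under these assumptions, a smaller back-off gives more attempts within the same maximum interval, and a maximum interval shorter than the back-off gives no retries at all.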

Persistence

An important configuration aspect of the Job Service is the persistence mechanism. It is where all the jobs information is stored, and it guarantees that no information is lost upon service restarts.

The Job Service image is shipped with the PostgreSQL, Ephemeral, and Infinispan persistence mechanisms. You can switch between them by setting the JOBS_SERVICE_PERSISTENCE environment variable to one of these values: postgresql, ephemeral, or infinispan. If not set, it defaults to the ephemeral option.

Note: The kogito-jobs-service-allinone image is a composite packaging that includes one image per persistence mechanism, which makes it considerably bigger than the individual ones. If that size is an issue in your installation, you can use the individual images instead: kogito-jobs-service-postgresql, kogito-jobs-service-ephemeral, or kogito-jobs-service-infinispan. If you use this alternative, JOBS_SERVICE_PERSISTENCE must not be used, since the persistence mechanism is auto-determined.

PostgreSQL

PostgreSQL is the recommended database to use with the Job Service. Additionally, it provides an initialization procedure that integrates Flyway to automatically control the database schema. In this way, the tables are created or updated by the service when required.

In case you need to externally control the database schema, you can check and apply the DDL scripts for the Job Service in the same way as described in the Manually executing scripts guide.

To configure the PostgreSQL persistence you must provide these configurations:

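Using environment variables:

| Variable | Description | Example value |
|---|---|---|
| JOBS_SERVICE_PERSISTENCE | Configure the persistence mechanism that must be used. | postgresql |
| QUARKUS_DATASOURCE_USERNAME | Username to connect to the database. | postgres |
| QUARKUS_DATASOURCE_PASSWORD | Password to connect to the database. | pass |
| QUARKUS_DATASOURCE_JDBC_URL | JDBC datasource URL used by Flyway to connect to the database. | jdbc:postgresql://timeouts-showcase-database:5432/postgres?currentSchema=jobs-service |
| QUARKUS_DATASOURCE_REACTIVE_URL | Reactive datasource URL used by the Job Service to connect to the database. | postgresql://timeouts-showcase-database:5432/postgres?search_path=jobs-service |

Using system properties with Java-like names:

| Variable | Description | Example value |
|---|---|---|
| JOBS_SERVICE_PERSISTENCE | Always an environment variable. | postgresql |
| quarkus.datasource.username | Username to connect to the database. | postgres |
| quarkus.datasource.password | Password to connect to the database. | pass |
| quarkus.datasource.jdbc.url | JDBC datasource URL used by Flyway to connect to the database. | jdbc:postgresql://timeouts-showcase-database:5432/postgres?currentSchema=jobs-service |
| quarkus.datasource.reactive.url | Reactive datasource URL used by the Job Service to connect to the database. | postgresql://timeouts-showcase-database:5432/postgres?search_path=jobs-service |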

The timeouts showcase example Quarkus Workflow Project with standalone services shows how to run a PostgreSQL based Job Service as a Kubernetes deployment. In your local environment, you might have to change some of these values to point to your own PostgreSQL database.

Ephemeral

The Ephemeral persistence mechanism is based on an embedded PostgreSQL database and does not require any external configuration. However, the database is recreated on each service restart, so it must be used only for testing purposes.

| Variable | Description | Example value |
|---|---|---|
| JOBS_SERVICE_PERSISTENCE | Configure the persistence mechanism that must be used. | ephemeral |

Note: If the image is started without configuring any persistence mechanism, the Ephemeral mechanism is used by default.

Infinispan

To configure the Infinispan persistence you must provide these configurations:

| Variable | Description | Example value |
|---|---|---|
| JOBS_SERVICE_PERSISTENCE | Configure the persistence mechanism that must be used. Always an environment variable. | infinispan |
| | Sets the host name/port to connect to. Each one is separated by a semicolon. | |
| | Enables or disables authentication. Whether to enable this parameter depends on your local Infinispan installation. A default value applies if it is not set. | |
| | Sets SASL mechanism used by authentication. For more information about this parameter, see Quarkus Infinispan Client Reference. A default value applies when the authentication is enabled. | |
| | Sets realm used by authentication. A default value applies when the authentication is enabled. | |
| | Sets username used by authentication. Use this property if the authentication is enabled. | |
| | Sets password used by authentication. Use this property if the authentication is enabled. | |

Note: The Infinispan client configuration parameters that you must configure depend on your local Infinispan service. Therefore, the table above shows only a subset of all the available options. To see the list of all the options supported by the Quarkus Infinispan client, read the Quarkus Infinispan Client Reference.

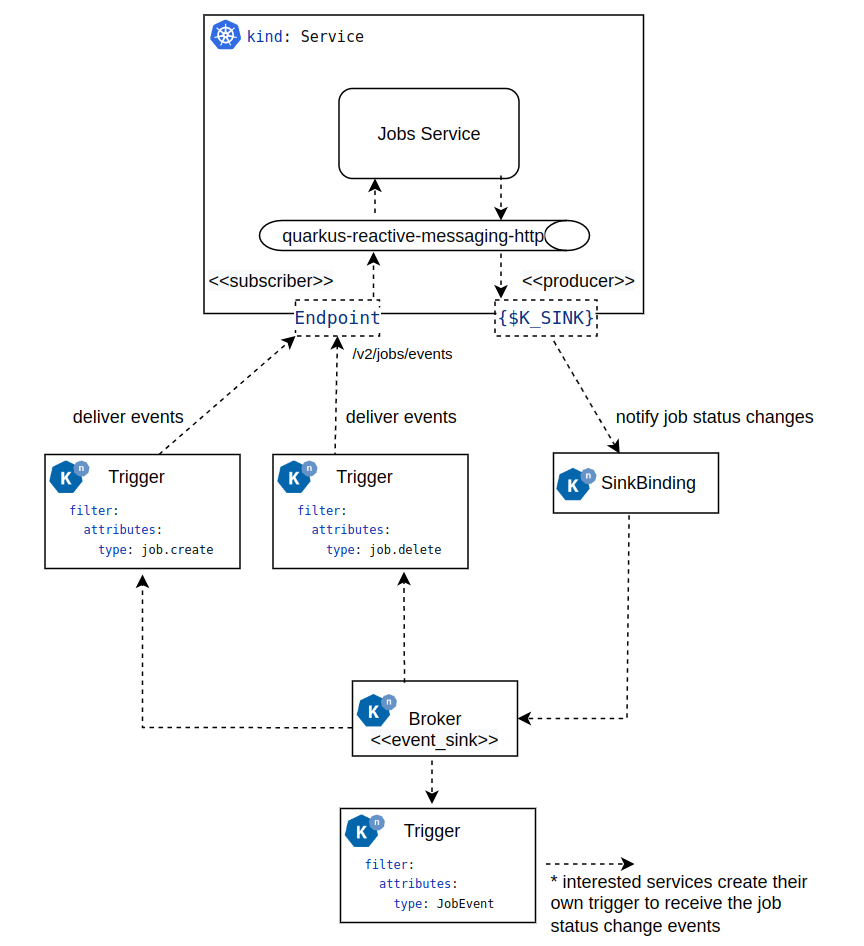

Eventing API

The Job Service provides a Cloud Event based API that can be used to create and delete jobs. This API is useful in deployment scenarios where you want to use an event based communication from the workflow runtime to the Job Service. For the transport of these events, you can use the Knative eventing system or the Kafka messaging system.

Knative eventing

By default, the Job Service Eventing API is prepared to work in a Knative eventing system. This means that, without any additional configuration parameters, it can receive cloud events through the Knative eventing system to manage the jobs. However, you must still prepare your Knative eventing environment to ensure these events are properly delivered to the Job Service, see Knative eventing supporting resources.

Finally, the only configuration parameter that you might need to set is the one that enables the propagation of the Job Status Change events, for example, if you want to register these events in the Data Index Service.

| Variable | Description | Default value |
|---|---|---|
| | Enables the propagation of the Job Status Change events. | |

Knative eventing supporting resources

To ensure the Job Service receives the knative events to manage the jobs, you must create the create job events and delete job events triggers shown in the diagram below. Additionally, if you have enabled the Job Status Change events propagation you must create the sink binding.

The following snippets show an example of how you can configure these resources. Consider that these configurations might need to be adjusted to your local Kubernetes cluster.

Note: We recommend that you visit the example Quarkus Workflow Project with standalone services to see a full setup of all these configurations.

Create job events trigger:

    apiVersion: eventing.knative.dev/v1
    kind: Trigger
    metadata:
      name: jobs-service-postgresql-create-job-trigger
    spec:
      broker: default
      filter:
        attributes:
          type: job.create
      subscriber:
        ref:
          apiVersion: v1
          kind: Service
          name: jobs-service-postgresql
        uri: /v2/jobs/events

Delete job events trigger:

    apiVersion: eventing.knative.dev/v1
    kind: Trigger
    metadata:
      name: jobs-service-postgresql-delete-job-trigger
    spec:
      broker: default
      filter:
        attributes:
          type: job.delete
      subscriber:
        ref:
          apiVersion: v1
          kind: Service
          name: jobs-service-postgresql
        uri: /v2/jobs/events

For more information about triggers, see Knative Triggers.

Sink binding:

    apiVersion: sources.knative.dev/v1
    kind: SinkBinding
    metadata:
      name: jobs-service-postgresql-sb
    spec:
      sink:
        ref:
          apiVersion: eventing.knative.dev/v1
          kind: Broker
          name: default
      subject:
        apiVersion: apps/v1
        kind: Deployment
        selector:
          matchLabels:
            app.kubernetes.io/name: jobs-service-postgresql
            app.kubernetes.io/version: 2.0.0-SNAPSHOT

For more information about sink bindings, see Knative Sink Bindings.
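To make the trigger filters more concrete, the following sketch builds a CloudEvents 1.0 JSON envelope whose `type` attribute would match the `job.create` filter shown in the trigger definitions. The helper name `build_job_event`, the `source` value, and the `data` payload are hypothetical: the payload that the Job Service expects is not described in this document, so only the standard CloudEvents attributes (`specversion`, `id`, `source`, `type`) should be read as facts.

```python
import json
import uuid
from datetime import datetime, timezone


def build_job_event(event_type: str, source: str, data: dict) -> str:
    """Serialize a CloudEvents 1.0 structured-mode JSON envelope."""
    event = {
        "specversion": "1.0",                            # required by CloudEvents
        "id": str(uuid.uuid4()),                         # required, unique per event
        "source": source,                                # required, identifies the producer
        "type": event_type,                              # required, matched by the trigger filter
        "time": datetime.now(timezone.utc).isoformat(),  # optional timestamp (RFC 3339)
        "datacontenttype": "application/json",           # optional, format of "data"
        "data": data,                                    # hypothetical payload
    }
    return json.dumps(event)


if __name__ == "__main__":
    # Hypothetical source and payload, for illustration only.
    print(build_job_event("job.create", "/hypothetical/workflow", {"id": "example-job"}))
```

In structured mode, such a body is sent with the `application/cloudevents+json` content type. In the Knative setup above, the broker would route an event with `type: job.create` to the `/v2/jobs/events` path of the Job Service.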

Kafka messaging

To enable the Job Service Eventing API via the Kafka messaging system you must provide these configurations:

| Variable | Description | Default value |
|---|---|---|
| quarkus.profile | Sets the Quarkus profile that enables the Kafka eventing API. By default, the Kafka eventing API is disabled. | |
| | A comma-separated list of host:port to use for establishing the initial connection to the Kafka cluster. | |
| | Kafka topic for events API incoming events. In general, you don’t need to change this value. | |
| | Kafka topic for job status change outgoing events. In general, you don’t need to change this value. | |

Note: Depending on your Kafka messaging system configuration, you might need to apply additional Kafka configurations, for example, to connect to the Kafka broker. To see the list of all the supported configurations, read the Quarkus Apache Kafka Reference Guide.

Leader election

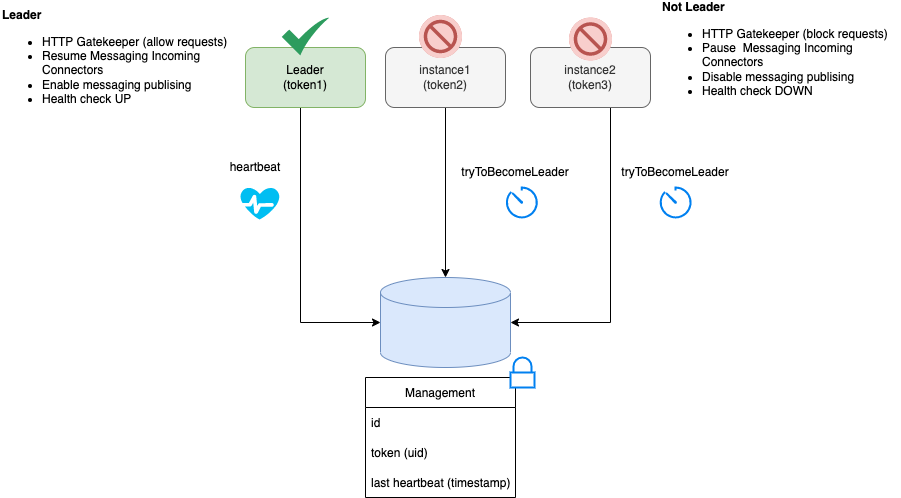

Currently, the Job Service is a singleton service, and thus, just one active instance of the service can be scheduling and executing the jobs.

To avoid issues when it is deployed in the cloud, where it is common to eventually have more than one instance deployed, the Job Service supports a leader instance election process. Only the instance that becomes the leader activates the external communication to receive and schedule jobs.

All the instances that are not leaders stay inactive in a wait state and continuously try to become the leader.

When a new instance of the service is started, it is not set as a leader at startup time but instead, it starts the process to become one.

When the leader instance becomes unresponsive for any reason or is shut down, one of the other running instances becomes the leader.
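The behavior described above can be pictured with a lease-based election. The following is a simplified in-memory sketch, not the Job Service implementation: the `LeaseStore` class stands in for a shared record in the persistence backend, and all names (`Lease`, `LeaseStore`, `Instance`, `heartbeat`, `ttl`) are hypothetical. A real implementation would also need the acquire step to be atomic in the database.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    holder: Optional[str] = None
    expires_at: float = 0.0


class LeaseStore:
    """In-memory stand-in for a shared lease record in the persistence backend."""

    def __init__(self) -> None:
        self._lease = Lease()

    def try_acquire(self, instance_id: str, now: float, ttl: float) -> bool:
        """Acquire or renew the lease if it is free, expired, or already ours."""
        lease = self._lease
        if lease.holder in (None, instance_id) or lease.expires_at <= now:
            self._lease = Lease(holder=instance_id, expires_at=now + ttl)
            return True
        return False

    def release(self, instance_id: str) -> None:
        """Give up the lease on a clean shutdown."""
        if self._lease.holder == instance_id:
            self._lease = Lease()


class Instance:
    def __init__(self, instance_id: str, store: LeaseStore, ttl: float = 10.0) -> None:
        self.instance_id = instance_id
        self.store = store
        self.ttl = ttl
        self.is_leader = False  # an instance is never the leader at startup

    def heartbeat(self, now: float) -> None:
        """Try to become (or remain) the leader; called continuously by every instance."""
        self.is_leader = self.store.try_acquire(self.instance_id, now, self.ttl)

    def shutdown(self) -> None:
        self.store.release(self.instance_id)
        self.is_leader = False
```

In this model, a leader that stops sending heartbeats loses the lease once it expires, and the next waiting instance that calls `heartbeat` takes over. A leader that shuts down cleanly releases the lease immediately.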

Note: This leader election mechanism relies on the underlying persistence backend, and is currently supported only by the PostgreSQL implementation.

No configuration is needed to support this feature. The only requirement is to have the supported database with the data schema up-to-date, as described in the PostgreSQL section.

In case the underlying persistence does not support this feature, you must guarantee that just one single instance of the Job Service is running at the same time.
