Timeouts on events for Serverless Workflow

When you define a state in a serverless workflow, you can use the timeouts property to configure the maximum time to complete this state.

When that time elapses, the state is considered timed out, and the engine continues the execution from this state. The execution flow depends on the state type; for instance, the workflow can transition to the next state.

All the properties you can use to configure state timeouts are described in the Serverless Workflow specification.

Event-based states can use the sub-property eventTimeout to configure the maximum time to wait for an event to arrive.

This property uses the ISO 8601 date and time standard to specify a duration.

It follows the format PnDTnHnMn.nS with days considered to be exactly 24 hours.

For instance, PT15M configures 15 minutes, and P2DT3H4M defines 2 days, 3 hours and 4 minutes.

Event timeouts cannot be defined as a specific point in time. They must be defined as a duration, which starts when the referred state becomes active in the workflow.

Kogito currently supports timeouts only for the Callback, Switch (with events), and Event states. Other states will be included in future releases.
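As a rough illustration of how an eventTimeout value maps to an amount of time, the following Python sketch parses the PnDTnHnMn.nS format and adds the result to the moment a state becomes active. The names parse_duration and timeout_deadline are hypothetical helpers written for this page, not part of Kogito, and the engine's own parser may accept or reject some inputs differently.

```python
import re
from datetime import datetime, timedelta, timezone

# Matches the PnDTnHnMn.nS shape: optional days, then an optional time part.
_DURATION = re.compile(
    r"^P(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?(?:(?P<seconds>\d+(?:\.\d+)?)S)?)?$"
)


def parse_duration(text: str) -> timedelta:
    """Convert a PnDTnHnMn.nS string into a timedelta (a day is exactly 24 hours)."""
    match = _DURATION.match(text)
    # Reject strings that carry no component at all, such as "P" or "PT".
    if not match or not any(match.groupdict().values()):
        raise ValueError(f"not a PnDTnHnMn.nS duration: {text!r}")
    parts = {name: float(value) for name, value in match.groupdict().items() if value}
    return timedelta(**parts)


def timeout_deadline(activated_at: datetime, event_timeout: str) -> datetime:
    """The timeout is a duration counted from the moment the state becomes active."""
    return activated_at + parse_duration(event_timeout)


if __name__ == "__main__":
    print(parse_duration("PT15M"))      # 0:15:00
    print(parse_duration("P2DT3H4M"))   # 2 days, 3:04:00
    active = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    print(timeout_deadline(active, "PT30S"))  # 2024-01-01 12:00:30+00:00
```

The sketch deliberately has no way to express an absolute date: the only input is a duration, and the deadline is always derived from the activation time.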

Callback state timeout

The Callback state can be used when you need to execute an action, typically a call to an external service, and wait for an asynchronous response in the form of an event: the callback.

Once the response event is consumed, the workflow continues the execution, typically moving to the next state defined in the transition property. For more information, see the Callback state in the Serverless Workflow specification.

Because the callback state halts the execution until the event is consumed, you can define an eventTimeout for it. If the event does not arrive within the defined duration, the workflow continues the execution and moves to the next state defined in the transition, as shown in the example.

{
  "name": "CallbackState",
  "type": "callback",
  "action": {
    "name": "callbackAction",
    "functionRef": {
      "refName": "callbackFunction",
      "arguments": {
        "input": "${\"callback-state-timeouts: \" + $WORKFLOW.instanceId + \" has executed the callbackFunction.\"}"
      }
    }
  },
  "eventRef": "callbackEvent",
  "transition": "CheckEventArrival",
  "onErrors": [
    {
      "errorRef": "callbackError",
      "transition": "FinalizeWithError"
    }
  ],
  "timeouts": {
    "eventTimeout": "PT30S"
  }
}

Switch state timeout

The switch state can be used when you need to take an action based on conditions defined with the eventConditions property. The workflow execution waits to make a decision depending on the events to be consumed and matched, which are defined through event definitions.

In this situation, you can define an event timeout that controls the maximum time to wait for an event to match the conditions. If this time expires, the workflow moves to the state defined in the defaultCondition property of the switch state, as you can see in the example.

For more details about this state, see the Serverless Workflow specification.

{
  "name": "ChooseOnEvent",
  "type": "switch",
  "eventConditions": [
    {
      "eventRef": "visaApprovedEvent",
      "transition": "ApprovedVisa"
    },
    {
      "eventRef": "visaDeniedEvent",
      "transition": "DeniedVisa"
    }
  ],
  "defaultCondition": {
    "transition": "HandleNoVisaDecision"
  },
  "timeouts": {
    "eventTimeout": "PT5S"
  }
}

Event state timeout

The event state is used to wait for one or more events to be received by the workflow and then continue the execution.

If the event state is a starting state, a new workflow instance is created.

|

The event state is not supported as a starting state if the |

The timeouts property is used for this state to configure the maximum time the workflow should wait for the defined events to arrive.

If this time is exceeded and the events are not received, the workflow moves to the state defined in the transition property or ends the workflow instance without performing any actions in case of an end state.

You can see this in the example.

For more information about event state timeout, see Serverless Workflow specification.

{
  "name": "WaitForEvent",
  "type": "event",
  "onEvents": [
    {
      "eventRefs": [
        "event1"
      ],
      "eventDataFilter": {
        "data": "${ \"The event1 was received.\" }",
        "toStateData": "${ .exitMessage }"
      },
      "actions": [
        {
          "name": "printAfterEvent1",
          "functionRef": {
            "refName": "systemOut",
            "arguments": {
              "message": "${\"event-state-timeouts: \" + $WORKFLOW.instanceId + \" executing actions for event1.\"}"
            }
          }
        }
      ]
    },
    {
      "eventRefs": [
        "event2"
      ],
      "eventDataFilter": {
        "data": "${ \"The event2 was received.\" }",
        "toStateData": "${ .exitMessage }"
      },
      "actions": [
        {
          "name": "printAfterEvent2",
          "functionRef": {
            "refName": "systemOut",
            "arguments": {
              "message": "${\"event-state-timeouts: \" + $WORKFLOW.instanceId + \" executing actions for event2.\"}"
            }
          }
        }
      ]
    }
  ],
  "timeouts": {
    "eventTimeout": "PT30S"
  },
  "transition": "PrintExitMessage"
}

Deploying a timer-based workflow

To deploy a workflow that contains timeouts or any other timer-based action, you must have the Job Service running in your environment. The Job Service is an external service responsible for controlling the workflow timers; see the Job Service configuration section for more information. In the timeout example, you can see the details of how to set up a Knative infrastructure with the workflow and the Job Service running.

Job Service configuration

All timer-related actions that might be declared in a workflow are handled by a supporting service called Job Service, which is responsible for managing, scheduling, and firing all actions (jobs) to be executed in the workflows.

If the workflow service is not configured to use the Job Service, or no such service is running, all timer-related actions use an embedded in-memory implementation of the Job Service. Do not use this implementation in production: when the application shuts down, all timers are lost, and in a serverless architecture shutdowns are very common because of the scale-to-zero approach. Use the configuration without the Job Service only for testing or development.

The main goal of the Job Service is to work with only active jobs. The Job Service tracks only the jobs that are scheduled and that need to be executed. When a job reaches a final state, the job is removed from the Job Service.
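To make the "active jobs only" idea concrete, here is a minimal in-memory model written in Python. It is a conceptual sketch under stated assumptions, not the Job Service implementation: the class ActiveJobs, its methods, and the use of plain numbers for time are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ActiveJobs:
    """Toy registry that tracks only jobs that still need to be executed."""

    due_times: Dict[str, float] = field(default_factory=dict)  # job id -> due time

    def schedule(self, job_id: str, due_at: float) -> None:
        self.due_times[job_id] = due_at

    def cancel(self, job_id: str) -> None:
        # A canceled job has reached a final state, so it is no longer tracked.
        self.due_times.pop(job_id, None)

    def fire_due(self, now: float, callback: Callable[[str], None]) -> List[str]:
        """Trigger the callback for every due job, then forget those jobs."""
        due = sorted(
            (job_id for job_id, due_at in self.due_times.items() if due_at <= now),
            key=self.due_times.__getitem__,
        )
        for job_id in due:
            callback(job_id)            # the registry only notifies the owner
            del self.due_times[job_id]  # an executed job is final and is removed
        return due


if __name__ == "__main__":
    jobs = ActiveJobs()
    jobs.schedule("callback-timeout", due_at=30.0)
    jobs.schedule("switch-timeout", due_at=5.0)
    jobs.fire_due(now=10.0, callback=print)
    print(sorted(jobs.due_times))
```

Because this model keeps everything in a dictionary, a restart would lose every pending timer, which is the same limitation that makes the embedded in-memory option unsuitable for production.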

When configured in your environment, all the jobs information and status changes are sent to the Kogito Data Index Service, where they can be indexed and made available by GraphQL queries.

Data index service and the support for jobs information will be available in future releases.

Job Service persistence

An important configuration aspect of job service is the persistence mechanism, where all job information is stored in a database that makes this information durable upon service restarts and guarantees no information is lost.

PostgreSQL

PostgreSQL is the recommended database to use with the Job Service. Additionally, the Job Service provides an initialization procedure that integrates Flyway for the database initialization. Flyway automatically controls the database schema, so all tables are created by the service.

If you need to control the database schema externally, you can check the Flyway SQL scripts in migration and apply them.

You need to set the proper configuration parameters when starting the Job Service. The example shows how to run PostgreSQL as a Kubernetes deployment, but you can run it in whatever way fits your environment. The important part is that all the configuration parameters point to your running instance of PostgreSQL.

Job Service communication

The Job Service does not execute a job but triggers a callback that might be an HTTP request or a Cloud Event that is managed by the configured jobs addon in the workflow application.

Knative Eventing

To configure the communication between the Job Service and the workflow runtime through the Knative Eventing system, you must provide a set of configurations.

The Job Service configuration is done through the deployment descriptor shown in the example.

Addon configuration in the workflow runtime

The communication between the workflow application and the Job Service is done through an addon, which is responsible for publishing and consuming events related to timers.

When you run the workflow as a Knative service, you must add the kogito-addons-quarkus-jobs-knative-eventing addon to your project and provide the proper configuration.

- Dependency in the pom.xml:

<dependency>
  <groupId>org.kie.kogito</groupId>
  <artifactId>kogito-addons-quarkus-jobs-knative-eventing</artifactId>
</dependency>

- Configuration parameters:

# Events produced by kogito-addons-quarkus-jobs-knative-eventing to program the timers on the Job Service.
mp.messaging.outgoing.kogito-job-service-job-request-events.connector=quarkus-http
mp.messaging.outgoing.kogito-job-service-job-request-events.url=${K_SINK:http://localhost:8280/jobs/events}
mp.messaging.outgoing.kogito-job-service-job-request-events.method=POST
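The url parameter uses a property expression with a default value, ${K_SINK:http://localhost:8280/jobs/events}. Assuming the usual ${name:default} semantics of Quarkus configuration, the K_SINK value wins when it is defined (on Knative it is typically injected by a SinkBinding), and the local URL is used otherwise. The Python sketch below only mimics that lookup for illustration; the function resolve is hypothetical and handles a single, non-nested expression.

```python
import re

# One ${name} or ${name:default} expression spanning the whole value.
_EXPRESSION = re.compile(r"^\$\{(?P<name>[^:}]+)(?::(?P<default>.*))?\}$")


def resolve(value: str, env: dict) -> str:
    """Return env[name] if present, otherwise the default after the first colon."""
    match = _EXPRESSION.match(value)
    if not match:
        return value  # a plain value, nothing to expand
    name, default = match["name"], match["default"]
    if name in env:
        return env[name]
    if default is None:
        raise KeyError(f"{name} is not set and the expression has no default")
    return default


if __name__ == "__main__":
    expression = "${K_SINK:http://localhost:8280/jobs/events}"
    # Local development: K_SINK is not defined, so the default applies.
    print(resolve(expression, {}))
    # On the cluster: the injected sink address takes precedence (made-up URL).
    print(resolve(expression, {"K_SINK": "http://broker.example/default"}))
```

Only the first colon separates the name from the default, which is why the default can itself be a URL containing colons.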

Timeout showcase example

In the serverless-workflow-timeouts-showcase you can see an end-to-end example that contains a serverless workflow application with timeouts configured alongside Job Service running on Knative.

The example includes workflows that showcase the timeouts usage in the Callback, Switch, and Event states.

Callback workflow

It is a simple workflow in which, once the execution reaches the callback state, it waits for the callbackEvent event to arrive before continuing the execution.

{
  "name": "callbackEvent",
  "source": "",
  "type": "callback_event_type"
}

The timeout is configured so that the workflow waits a maximum of 30 seconds to receive the callbackEvent. If the event does not arrive in time, the execution moves on, and the eventData variable remains null. See the callback state definition.
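To complete the callback before the timeout, something has to deliver a CloudEvent whose type matches callback_event_type. The Python sketch below builds such an event as a dictionary. Several details are assumptions to verify against the example's readme: the kogitoprocrefid extension attribute used to correlate the event with a workflow instance, the source value, and the payload shape with an eventData field. The function build_callback_event is hypothetical.

```python
import json
import uuid


def build_callback_event(instance_id: str, event_data: str) -> dict:
    """Build a CloudEvent (spec version 1.0) of type callback_event_type."""
    return {
        # Required CloudEvents context attributes.
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": "/local/sketch",       # assumption: any non-empty URI reference
        "type": "callback_event_type",   # must match the event definition
        "datacontenttype": "application/json",
        # Assumption: extension attribute that ties the event to one instance.
        "kogitoprocrefid": instance_id,
        # Assumption: payload shape; the text above only names eventData.
        "data": {"eventData": event_data},
    }


if __name__ == "__main__":
    event = build_callback_event("instance-id-from-the-create-call", "callback received")
    print(json.dumps(event, indent=2))
```

If no such event reaches the workflow within the 30 seconds, nothing populates eventData, which matches the null value described above.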

Switch workflow

The switch example is similar to the callback one, but once the execution reaches the state, it waits for one of the two configured events, visaDeniedEvent or visaApprovedEvent, to arrive. See the switch state definition.

If either of the configured events arrives before the timeout expires, the workflow execution moves to the next state defined in the corresponding transition.

If none of the events arrives before the timeout expires, the workflow moves to the state defined in the defaultCondition transition.
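The decision rule can be modeled in a few lines of Python. This is a conceptual sketch only, not how the engine is implemented: the function resolve_switch is hypothetical, events are represented by their names on an in-process queue, and the timeout is given in seconds.

```python
import queue
import time


def resolve_switch(events, event_conditions, default_transition, timeout_seconds):
    """Return the transition for the first matching event, or the default on timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return default_transition
        try:
            event_ref = events.get(timeout=remaining)
        except queue.Empty:
            return default_transition  # nothing matched before the deadline
        if event_ref in event_conditions:
            return event_conditions[event_ref]
        # Unrelated events are ignored and do not extend the deadline.


if __name__ == "__main__":
    conditions = {
        "visaApprovedEvent": "ApprovedVisa",
        "visaDeniedEvent": "DeniedVisa",
    }
    incoming = queue.Queue()
    incoming.put("visaDeniedEvent")
    print(resolve_switch(incoming, conditions, "HandleNoVisaDecision", 5.0))
    # With an empty queue the call returns the default after the timeout.
    print(resolve_switch(queue.Queue(), conditions, "HandleNoVisaDecision", 0.1))
```

The deadline is computed once, so events that match no condition cannot postpone the move to the default transition.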

Event workflow

The event example is similar to the switch one, but once the execution reaches the state, it waits for one of the configured events, event1 or event2, to arrive. See the event state definition.

If none of the configured events arrives before the timeout expires, the workflow execution moves to the next state defined in the transition property, skipping the events that were not received in time together with the actions configured for them.

If one of the events arrives before the timeout expires, the workflow processes that event as configured in the state definition, executes the actions defined for it, and then moves to the state defined in transition.

Running the example

To run the example, you must have access to a Kubernetes cluster with Knative configured.

For simplicity, the example uses minikube. You can follow the steps described in the example’s readme.

All the descriptor files used to deploy the example infrastructure are present in the example.

The database and Job Service deployment files are located under /kubernetes folder.

The descriptors related to the workflow application are generated after the build under /target/kubernetes.

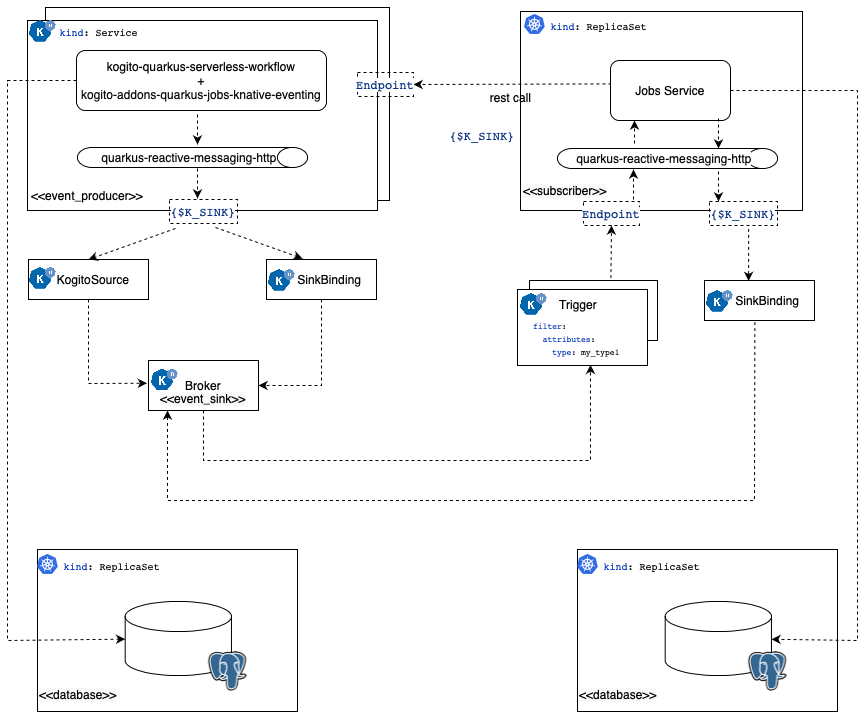

The following diagram shows the example’s architecture when it is deployed in the Kubernetes + Knative infrastructure.

Deploying the database

The workflow application and the Job Service use PostgreSQL as the persistence backend to store information about the workflow instances and jobs, respectively. In the example, you can deploy a single database instance to be used by both. In a production environment, independent database instances are recommended.

To run PostgreSQL you need to apply the following on the cluster:

kubectl apply -f kubernetes/timeouts-showcase-database.yml

secret/timeouts-showcase-database created
deployment.apps/timeouts-showcase-database created
service/timeouts-showcase-database created

Deploying Job Service

kubectl apply -f kubernetes/jobs-service-postgresql.yml

service/jobs-service-postgresql created
deployment.apps/jobs-service-postgresql created
trigger.eventing.knative.dev/jobs-service-postgresql-create-job-trigger created
trigger.eventing.knative.dev/jobs-service-postgresql-cancel-job-trigger created
sinkbinding.sources.knative.dev/jobs-service-postgresql-sb created

Deploying the timeout showcase workflow

You need to build the workflow with the knative Maven profile. The build generates the descriptor files under the target/kubernetes folder and pushes the image to the container registry.

mvn clean install -Pknative

kubectl apply -f target/kubernetes/knative.yml
kubectl apply -f target/kubernetes/kogito.yml

service.serving.knative.dev/timeouts-showcase created
trigger.eventing.knative.dev/visa-denied-event-type-trigger-timeouts-showcase created
trigger.eventing.knative.dev/visa-approved-event-type-trigger-timeouts-showcase created
trigger.eventing.knative.dev/callback-event-type-trigger-timeouts-showcase created
sinkbinding.sources.knative.dev/sb-timeouts-showcase created

Creating a workflow instance

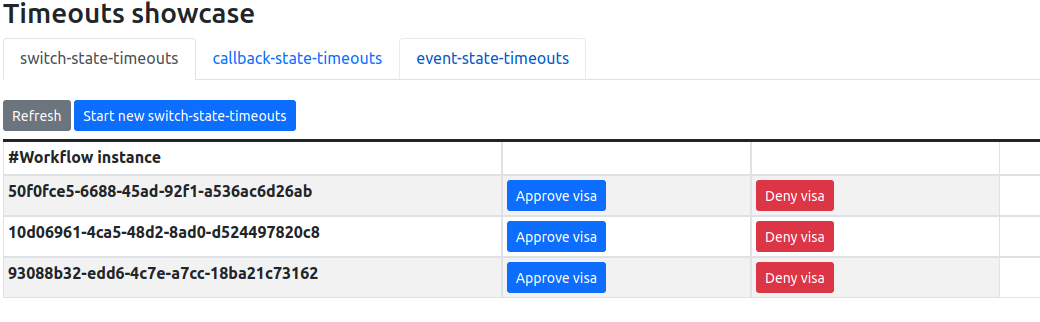

To create a workflow instance, you can interact with the workflow using the provided REST APIs. The example also provides a test Web UI to make testing easier.

First, you need to get the service URL on the cluster.

kn service list | grep timeouts-showcase

NAME URL LATEST AGE CONDITIONS READY REASON
timeouts-showcase http://timeouts-showcase.default.10.105.86.217.sslip.io timeouts-showcase-00001 3m50s 3 OK / 3 True

Using the showcase UI

The example Web UI is handy for interacting with the workflow. You just need to open the URL from the previous step in the browser.

You can create new workflow instances and interact with them to complete them, or simply wait for the timeout to be triggered to check that it works. For more details, see the readme.

Using REST APIs

You can test the workflows using the REST APIs. In fact, they are the same APIs that the Web UI uses for the workflows.

- Callback

curl -X 'POST' \
  'http://timeouts-showcase.default.10.105.86.217.sslip.io/callback_state_timeouts' \
  -H 'accept: */*' \
  -H 'Content-Type: application/json' \
  -d '{}'

- Switch

curl -X 'POST' \
  'http://timeouts-showcase.default.10.105.86.217.sslip.io/switch_state_timeouts' \
  -H 'accept: */*' \
  -H 'Content-Type: application/json' \
  -d '{}'

- Event

curl -X 'POST' \
  'http://timeouts-showcase.default.10.105.86.217.sslip.io/event_state_timeouts' \
  -H 'accept: */*' \
  -H 'Content-Type: application/json' \
  -d '{}'

- Checking whether the workflow instance was created:

curl -X 'GET' 'http://timeouts-showcase.default.10.105.86.217.sslip.io/switch_state_timeouts'

The command produces an output like this, which indicates that the process is waiting for an event to arrive.

[{"id":"2e8e1930-9bae-4d60-b364-6fbd61128f51","workflowdata":{}}]

- Checking that the timeout was executed after 30 seconds:

curl -X 'GET' 'http://timeouts-showcase.default.10.105.86.217.sslip.io/switch_state_timeouts'

[]

As you can see, there are no active workflow instances, which indicates that the timeout was executed and the created instance was completed.
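The same create-then-check sequence can be scripted. The following Python sketch uses only the standard library and mirrors the curl calls; it is an illustration under assumptions, not part of the example. The helper names are hypothetical, the base URL is whatever kn service list reports in your cluster, and the sketch assumes that the POST response is a JSON object with an id field like the entries returned by the GET call.

```python
import json
import time
import urllib.request


def build_create_request(base_url: str, workflow_id: str) -> urllib.request.Request:
    """Prepare the POST that starts an instance, equivalent to the curl commands."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/{workflow_id}",
        data=b"{}",
        headers={"Content-Type": "application/json", "Accept": "*/*"},
        method="POST",
    )


def create_instance(base_url: str, workflow_id: str) -> dict:
    with urllib.request.urlopen(build_create_request(base_url, workflow_id)) as response:
        return json.load(response)


def list_instances(base_url: str, workflow_id: str) -> list:
    """GET the active instances of a workflow."""
    with urllib.request.urlopen(f"{base_url.rstrip('/')}/{workflow_id}") as response:
        return json.load(response)


def wait_until_finished(base_url, workflow_id, instance_id, limit_seconds=60.0, poll_seconds=2.0):
    """Poll until the instance is no longer listed as active; False if the limit passes."""
    deadline = time.monotonic() + limit_seconds
    while time.monotonic() < deadline:
        active_ids = {item["id"] for item in list_instances(base_url, workflow_id)}
        if instance_id not in active_ids:
            return True
        time.sleep(poll_seconds)
    return False


if __name__ == "__main__":
    base = "http://timeouts-showcase.default.10.105.86.217.sslip.io"  # replace with your URL
    created = create_instance(base, "switch_state_timeouts")
    # Assumption: the creation response carries the instance id.
    print(wait_until_finished(base, "switch_state_timeouts", created["id"]))
```

Polling the list endpoint keeps the script independent of how the timeout is delivered: the instance simply disappears from the active list once the timeout path completes.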
